Viral Hayli Gubbi Volcano Video Misrepresented as Real Footage

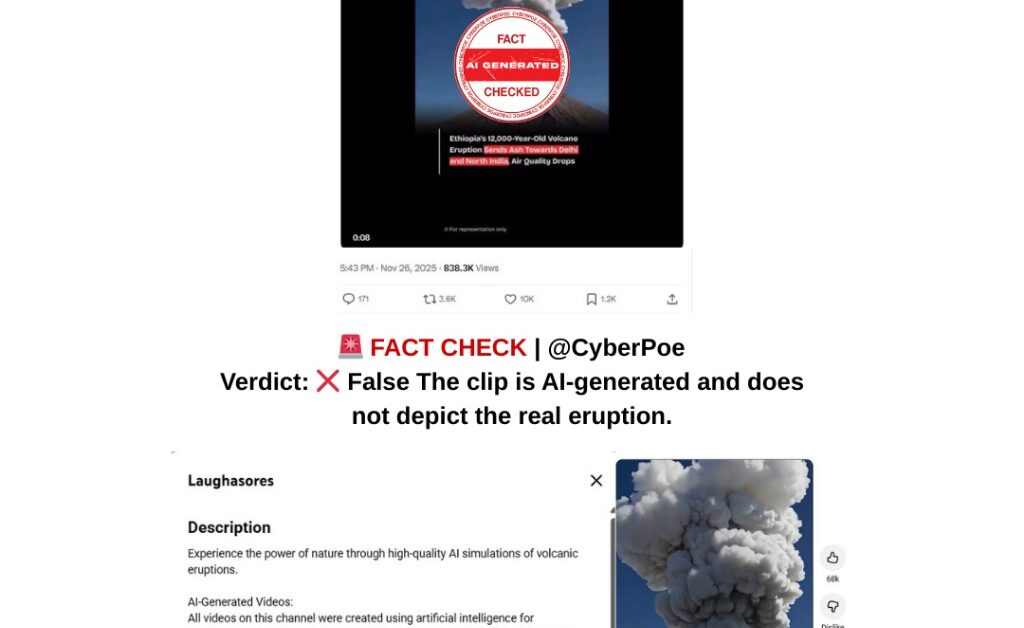

In late November 2025, social media platforms were flooded with a dramatic video allegedly showing the eruption of Ethiopia’s Hayli Gubbi volcano. The footage, which depicts lava spilling down mountainsides and an expanding plume of ash, quickly went viral across X[1], Instagram, Facebook, and Threads. Users claimed the video captured the historic eruption of a volcano in Ethiopia’s Afar region that had been dormant for nearly 12,000 years. Millions engaged with the content, sharing it alongside narratives emphasizing the eruption’s catastrophic potential. The real eruption did occur on November 23, 2025, but verification by CyberPoe shows that the viral video is not authentic footage. It is an AI-generated simulation.

The Real Hayli Gubbi Eruption

The Hayli Gubbi volcano[1] erupted in a remote area of Ethiopia’s Rift Valley, a geologically active zone. Satellite imagery and reports from the Ethiopian Geological Survey confirmed that the eruption produced ash clouds rising up to 14 kilometers into the atmosphere, with winds carrying the ash toward Yemen, Oman, India, and northern Pakistan. Because the volcano lies in such a remote location, the eruption caused no immediate casualties, but it was still a significant geological event given nearly twelve millennia of dormancy. Unlike the viral video, real footage and satellite images show a more contained eruption: ash plumes rising vertically, limited lava flow, and none of the exaggerated, cinematic visuals typical of AI-generated simulations.

Examination of the Viral Video

CyberPoe’s analysis found several indicators that the widely shared video is artificial. A reverse keyframe search traced the clip to a YouTube upload[1] dated July 5, 2025, months before the real eruption. The original uploader explicitly labeled the video as “created using artificial intelligence for entertainment,” confirming that it was a simulation rather than live footage. A frame-by-frame forensic review showed typical AI artifacts, including repeating lava textures, physically implausible ash movement, inconsistent lighting, and anomalous shading around the crater edges. A faint watermark reading “for representation only” was also embedded in the video, further indicating that the clip was never meant to depict actual events. Taken together, these characteristics show that the viral footage is synthetic and was misleadingly presented as real.

Discrepancies with Scientific Data

The discrepancies between the viral video and authentic eruption data are clear. Satellite imagery from Ethiopian monitoring agencies shows ash clouds rising straight up to 14 kilometers, a pattern inconsistent with the sprawling, horizontally spreading plume depicted in the AI clip. The lava flows in the viral video are exaggerated, covering far more terrain than the eruption actually affected. The crater structure and surrounding geography in the viral footage also do not match topographic surveys of Hayli Gubbi, further exposing the artificial nature of the visuals. Finally, the timeline rules the video out: it was uploaded on July 5, 2025, more than four months before the real eruption on November 23, so it cannot show the actual event.

Motivation for Misinformation

The spread of the AI-generated clip can be attributed to a combination of human curiosity, dramatic visuals, and the tendency to conflate viral content with reality. As news of the real eruption broke, users re-shared the AI simulation without context, assuming it was authentic. The visually striking footage, paired with sensational captions, amplified engagement and created a false narrative of a catastrophic eruption far more dramatic than reality. Misattribution of AI-generated content to real natural disasters is an increasingly common form of digital misinformation, particularly during times of heightened public interest in environmental and geological events.

Impact and Verification

The circulation of this video illustrates the importance of careful media verification. While the Hayli Gubbi eruption was real, misattributed visuals can distort public understanding and provoke unnecessary alarm. CyberPoe recommends that audiences verify media sources, check original publication dates, and consult scientific or government releases when assessing footage of natural disasters. In this case, independent satellite imagery, Ethiopian Geological Survey reports, and official communications all documented the actual eruption’s scale and pattern, and none of that evidence matches the viral AI-generated clip.

Conclusion

The viral video purporting to show Ethiopia’s Hayli Gubbi volcano erupting in November 2025 is not genuine. It is an AI-generated simulation created for entertainment purposes, and it predates the real eruption by more than four months. Although the Hayli Gubbi eruption itself was significant, the viral clip misrepresents the event and spreads misinformation by exaggerating its scale and visual intensity. CyberPoe rates this claim as false and misleading, underscoring the need for vigilance in verifying digital content now that AI-generated media can easily be mistaken for authentic footage. Audiences are advised to rely on verified satellite imagery, official geological reports, and credible media outlets to understand real-world natural disasters, rather than engaging with sensationalized, misattributed AI content.

CyberPoe | The Anti-Propaganda Frontline 🌍