AI-Generated Video Falsely Portrays Iranian Protesters Illuminating Streets with Phone Lights

Background of the Viral Claim

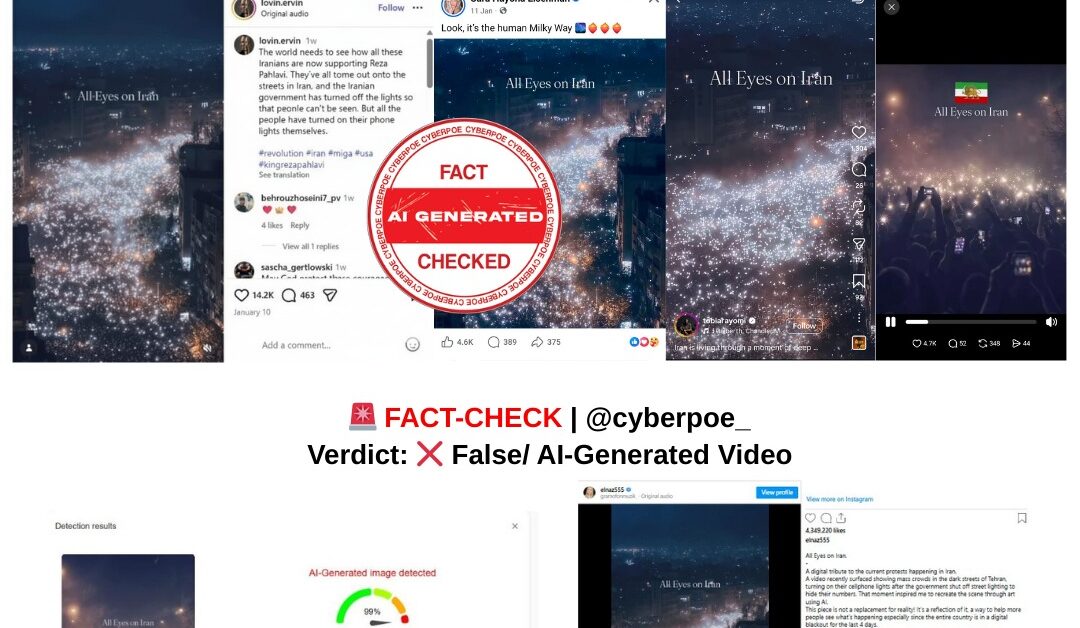

In January 2026, as protests spread across Iran under one of the most restrictive information blackouts the country has experienced in years, a visually arresting video began circulating widely on social media. The footage appeared to show massive crowds of Iranian protesters marching through darkened streets at night, holding up their mobile phones with flashlights turned on. Captions accompanying the clip claimed that Iranian authorities had deliberately shut off street lighting and electricity to conceal the demonstrations, forcing protesters to light their way using phone torches. Shared across X,[1] Instagram,[2] Facebook,[3] and Threads[4] in multiple languages, the video was framed as rare, courageous footage breaking through state censorship.

In an environment where access to verified images from Iran was severely limited, many users accepted the clip as authentic documentation. The imagery resonated deeply with audiences abroad, reinforcing perceptions of a population resisting repression under extreme conditions and amplifying calls for international attention.

Context of the Real Protests in Iran

The protests themselves were real and significant. Beginning in December 2025, demonstrations erupted across Iran in response to worsening economic pressures, rising food prices, currency devaluation, and long-standing political grievances.[1] As the movement grew, it expanded beyond economic demands into open challenges to Iran’s theocratic system. The state responded forcefully, imposing widespread internet restrictions, limiting foreign media access, and disrupting domestic communications.

Human rights organizations documented a severe crackdown. According to the Human Rights Activists News Agency, more than 4,000 deaths were linked to protest-related violence, while Iran Human Rights warned that the actual toll could be substantially higher due to reporting constraints.[2] This climate of repression created an acute information vacuum, making audiences particularly vulnerable to emotionally compelling visuals presented as eyewitness documentation.

CyberPoe’s Verification and Initial Findings

CyberPoe initiated a verification process combining visual forensic analysis, expert review, and AI-detection tools. While the video appeared convincing at first glance, closer examination revealed multiple inconsistencies incompatible with real-world footage. These findings prompted a deeper analysis of the clip’s origin and production method.

Frame-by-frame inspection showed irregularities in crowd movement, lighting behavior, and physical interactions between people and their environment. These anomalies raised immediate red flags, particularly because they matched known artifacts of AI-generated video.

Visual and Technical Indicators of AI Generation

Experts specializing in artificial intelligence and computer vision identified several telltale signs of synthetic media. Human figures in the video appeared to merge, blur, or disappear briefly, while hands and arms occasionally formed without coherent anatomy. The lighting from mobile phones behaved unnaturally, failing to cast consistent reflections on nearby windows, vehicles, or pavement. In real nighttime footage, such reflections are unavoidable; their absence strongly suggested algorithmic generation rather than camera capture.

To corroborate visual analysis, CyberPoe examined still frames using the InVID-WeVerify[1] verification plugin, developed with support from AFP. The tool detected indicators consistent with AI-generated content, reinforcing the conclusion that the footage was not authentic.

[1] https://www.invid-project.eu/tools-and-services/invid-verification-plugin/

Source Identification and Creator Confirmation

Further investigation traced versions of the video to the Instagram account @elnaz555.[1] The account belongs to Elnaz Mansouri, a multidisciplinary artist known for producing AI-generated visual work. When contacted, Mansouri confirmed that she created the video using artificial intelligence. She explained that the clip was intended as a symbolic or artistic reflection of the situation in Iran during the blackout, not as documentary evidence of a real protest.

While Mansouri disclosed the AI-generated nature of the video on her own platforms, that context was lost as the clip spread. Once separated from its original explanation, the video was widely reshared as factual footage, gaining credibility through repetition rather than verification.

How the Misinformation Took Hold

The video’s success as misinformation illustrates a broader dynamic in modern information warfare. In moments of crisis, audiences seek visuals to anchor abstract reports of violence and repression. When authentic imagery is scarce, synthetic media can easily fill the gap. The emotional realism of AI-generated visuals allows them to circulate unchecked, especially when they align with existing beliefs or fears. The reuse of familiar activist language, such as the phrase “All Eyes on Iran,” further blurred the boundary between symbolic advocacy and factual documentation. Similar slogans have appeared in previous global campaigns that also relied on AI-generated imagery, conditioning audiences to associate such visuals with real events.

Why This Matters

AI-generated protest imagery poses a serious challenge to public understanding. Even when created with expressive or advocacy-driven intent, such content can distort reality when presented as evidence. It risks undermining legitimate reporting, confusing audiences, and ultimately weakening trust in genuine documentation of human rights abuses.

In highly restricted environments like Iran, the credibility of real eyewitness accounts is already under strain. Mixing authentic suffering with fabricated visuals can unintentionally discredit real victims and complicate efforts to hold authorities accountable.

CyberPoe Conclusion

The viral video depicting Iranian protesters lighting streets with mobile phone flashlights is not real footage from Iran. It was generated using artificial intelligence, a conclusion supported by visual analysis and AI-detection tools and confirmed directly by the creator. While protests in Iran are real, widespread, and deadly, this particular clip does not document an actual event.

The case highlights how synthetic media can exploit information blackouts and emotional urgency, spreading faster than verification can follow. In an era of increasingly realistic AI visuals, critical scrutiny of sources, context, and technical markers is essential.

CyberPoe | The Anti-Propaganda Frontline 🌍