AI-Manipulated Image Falsely Presented as a High-Resolution Frame of the Alex Pretti Shooting in Minneapolis

The Viral Claim and Its Emotional Impact

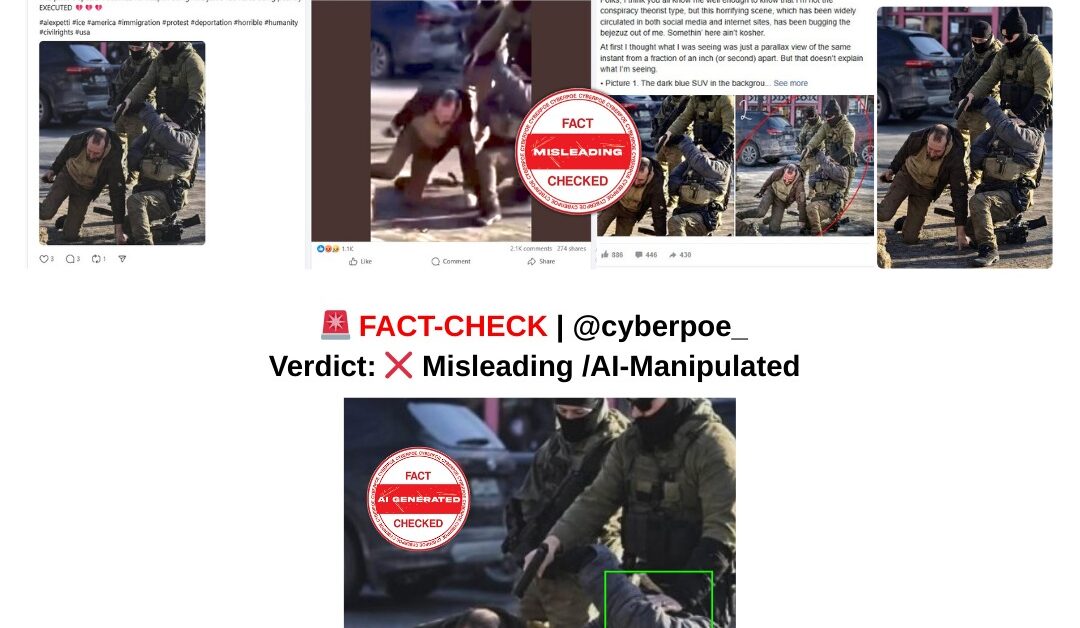

In the days following the fatal shooting of 37-year-old intensive care nurse Alex Pretti[1] by U.S. federal immigration agents in Minneapolis, social media was flooded with images and videos purporting to show what happened during the encounter. Among these visuals, one image rapidly gained traction across Facebook,[2] X,[3] and Threads.[4] It was presented as a sharp, high-resolution freeze frame allegedly captured directly from video footage of the shooting itself. The caption accompanying the image insisted it represented definitive proof of the moment Pretti was killed, with some users demanding it be placed on the cover of Time magazine as a symbol of state violence.

The image shows multiple armed agents standing over a kneeling man identified as Pretti, with a firearm aimed toward his head. Compared with previously circulating footage, which was grainy and filmed from a distance, this image appeared unusually clear and detailed. That perceived clarity gave it authority. Supporters of accountability shared it as evidence of excessive force,[5] while others used the same image to argue the opposite, claiming it showed Pretti reaching for a weapon. In both cases, the image became central to competing narratives about what actually occurred.

Context of the Minneapolis Shooting

The shooting took place on January 24, 2026, amid heightened tensions in Minneapolis following President Donald Trump’s expansion of federal immigration enforcement operations in the city.[1] According to the Department of Homeland Security, Pretti posed an immediate lethal threat and intended to use a firearm he was legally licensed to carry.[2] This official account was quickly challenged.

Independent investigations by organizations including Bellingcat and The New York Times analyzed verified bystander footage and concluded that Pretti never drew his weapon and appeared to have been disarmed before shots were fired. This discrepancy echoed an earlier case from January 7, when another Minneapolis resident, Renee Good, was killed by an ICE agent under circumstances later contradicted by video evidence. In both cases, visual material became the battleground on which public understanding of events was shaped.

Why the Circulating Image Is Not Authentic

CyberPoe’s verification began with reverse-image searches and frame comparisons. These revealed that the viral still closely matches a specific angle from authentic video footage of the Pretti incident that had already been publicly verified.[1] That original footage is low-resolution and heavily pixelated, consistent with a handheld recording captured under chaotic conditions. The viral image does not originate from a separate camera or higher-quality source. Instead, it appears to be an “enhanced” reconstruction of an existing frame.
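The frame-comparison step can be illustrated with a perceptual hash. What follows is a minimal Python sketch, not CyberPoe’s actual tooling: it assumes frames have already been decoded into grayscale grids (lists of rows), and the names `block_average`, `average_hash`, and `hamming` are illustrative. The idea is that an upscaled or "enhanced" copy of a frame keeps the same coarse brightness layout as its source, so the two hashes stay close, while an unrelated image drifts far away.

```python
from typing import List

Grid = List[List[float]]  # grayscale pixel values, one inner list per row


def block_average(img: Grid, size: int = 8) -> Grid:
    """Shrink a grid to size x size by averaging rectangular blocks.

    Assumes the image is at least `size` pixels in each dimension.
    """
    h, w = len(img), len(img[0])
    out = []
    for by in range(size):
        y0, y1 = by * h // size, (by + 1) * h // size
        row = []
        for bx in range(size):
            x0, x1 = bx * w // size, (bx + 1) * w // size
            vals = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def average_hash(img: Grid, size: int = 8) -> List[int]:
    """Return size*size bits: 1 where a block is brighter than the mean."""
    flat = [v for row in block_average(img, size) for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]


def hamming(a: List[int], b: List[int]) -> int:
    """Count the bit positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))


# Synthetic stand-ins for real frames (hypothetical data):
# a 16x16 horizontal gradient plays the role of the low-resolution frame,
low_res = [[float(x) for x in range(16)] for _ in range(16)]
# a 64x64 nearest-neighbour upscale plays the role of the "enhanced" still,
upscaled = [[low_res[y // 4][x // 4] for x in range(64)] for y in range(64)]
# and a vertical gradient plays the role of an unrelated image.
unrelated = [[float(y) for _ in range(16)] for y in range(16)]

print(hamming(average_hash(low_res), average_hash(upscaled)))   # 0
print(hamming(average_hash(low_res), average_hash(unrelated)))  # 32
```

A small distance only indicates that two images share a source layout. It says nothing about which fine details in the sharper copy are real, which is why the visual inspection described next still matters.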

Close visual inspection exposes multiple distortions that cannot be explained by camera limitations. One of the most striking anomalies is that the agent kneeling beside the figure identified as Pretti appears to have no head at all, a clear anatomical impossibility. Another officer’s leg bends at an unnatural angle near an indistinct object, suggesting that the system generating the image failed to maintain realistic human proportions. These errors are characteristic of AI-generated or AI-augmented imagery, not of photographic blur or compression.

The object in Pretti’s right hand is another critical inconsistency. In the manipulated image, the item is amorphous and indistinct, leading some viewers to interpret it as a firearm. Verified footage, however, clearly shows Pretti holding a mobile phone in that hand. This discrepancy illustrates how AI enhancement tools often “hallucinate” details that were never present, filling gaps in low-quality footage with invented visual information.

Expert Confirmation of AI Manipulation

The conclusion that the image was artificially altered is supported by expert analysis. Hany Farid, professor at the University of California, Berkeley’s School of Information and co-founder of the GetReal Security lab, reviewed the image and confirmed that it is an AI-enhanced still derived from real video footage.[1] On January 26, Farid explained that such enhancement tools do not simply recover lost detail. Instead, they infer what might be present based on patterns learned from training data.

This process can produce visuals that appear more realistic and authoritative than the original material while quietly introducing false elements. In legal and investigative contexts, these hallucinated details can be deeply misleading, especially when viewers are unaware that enhancement has occurred.
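A toy calculation shows why lost detail cannot simply be recovered. The Python sketch below is an illustration under simplifying assumptions, not a model of any real enhancement tool: it treats low resolution as plain 2x2 block averaging, and `downsample_2x` and both patches are hypothetical. Two visibly different patches collapse to the same low-resolution pixel, so any tool that "restores" the sharper version has to choose between them based on something other than the footage.

```python
def downsample_2x(img):
    """Average each 2x2 block of a grayscale grid into a single pixel."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


# Two different high-resolution patches (hypothetical pixel values):
flat_patch = [[100, 100],
              [100, 100]]      # uniform grey
contrast_patch = [[200, 0],
                  [0, 200]]    # sharp diagonal pattern

print(downsample_2x(flat_patch))      # [[100.0]]
print(downsample_2x(contrast_patch))  # [[100.0]]
```

Because the mapping from sharp to blurry is many-to-one, running it in reverse is a guess. The guess may look plausible, but the low-resolution frame alone cannot confirm it.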

The Broader Implications of AI-Altered Evidence

The circulation of AI-manipulated imagery in cases involving police or federal use of force poses a serious threat to public understanding. Viewers instinctively trust high-resolution images, even when those images are synthetic. In emotionally charged cases like the Pretti shooting, such visuals can inflame anger, distort legal debates, and entrench false narratives before the facts are fully established.

As AI tools become more accessible, the line between enhancement and fabrication continues to blur. Images presented as clarifying evidence may instead obscure reality, replacing uncertainty with false certainty. This dynamic risks undermining legitimate accountability efforts by contaminating the evidentiary record with altered visuals.

CyberPoe Conclusion

The image presented as a high-resolution freeze frame of the Alex Pretti shooting is not authentic. It is an AI-enhanced manipulation derived from low-quality video footage, containing fabricated anatomical details and misleading visual cues that materially alter what is shown. It does not represent an accurate or reliable depiction of the incident.

CyberPoe | The Anti-Propaganda Frontline 🌍