AI-Generated Image Falsely Linked to Deadly Thailand Crane Collapse

Overview of the Viral Claim

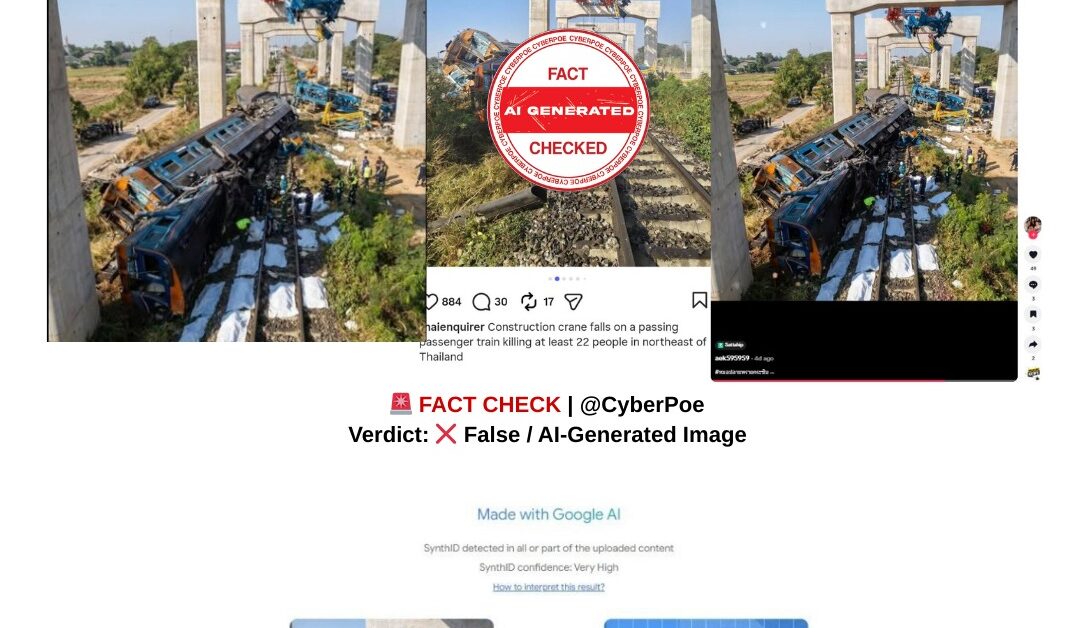

In mid-January 2026, shortly after Thailand was shaken by a deadly construction accident in Nakhon Ratchasima province, a disturbing image began circulating widely across social media platforms. Shared primarily on Facebook[1] in Thai-language posts and later amplified on TikTok[2] and Instagram,[3] the image appeared to show a passenger train torn apart on railway tracks, with multiple bodies covered in white sheets laid out in rows. Captions accompanying the image claimed it documented the immediate aftermath of a crane collapse that struck a passing train, killing dozens of passengers. As public attention focused intensely on the tragedy, the image was widely accepted as an authentic visual record of the disaster and spread rapidly, often paired with emotional messages mourning the victims.

The timing of the image’s emergence played a significant role in its credibility. With news breaking of at least 32 deaths and many injuries, audiences were primed to believe graphic visuals were finally surfacing from the scene. In such moments, emotionally charged imagery can feel like confirmation rather than a claim requiring verification.

The Reality of the January 2026 Accident

The real incident occurred on January 14, 2026, when a construction crane collapsed onto a passenger train traveling through an area where a high-speed rail bridge was under construction.[1] The project, valued at approximately five billion dollars, is part of Thailand’s broader rail expansion and has been developed with Chinese support. The contractor, Italian-Thai Development, is one of the country’s largest construction firms and has previously faced scrutiny over safety practices following a series of fatal incidents.

This specific crash reignited national debate over construction oversight, worker safety, and regulatory enforcement. It also came in the shadow of earlier controversies, including a 2025 case in which the same company and its director were indicted alongside numerous others after a Bangkok high-rise collapsed during an earthquake, killing around 90 people, most of them construction workers. Against this backdrop, public demand for accountability was intense, making any imagery claiming to show the consequences of the latest disaster especially potent.

CyberPoe’s Verification Process

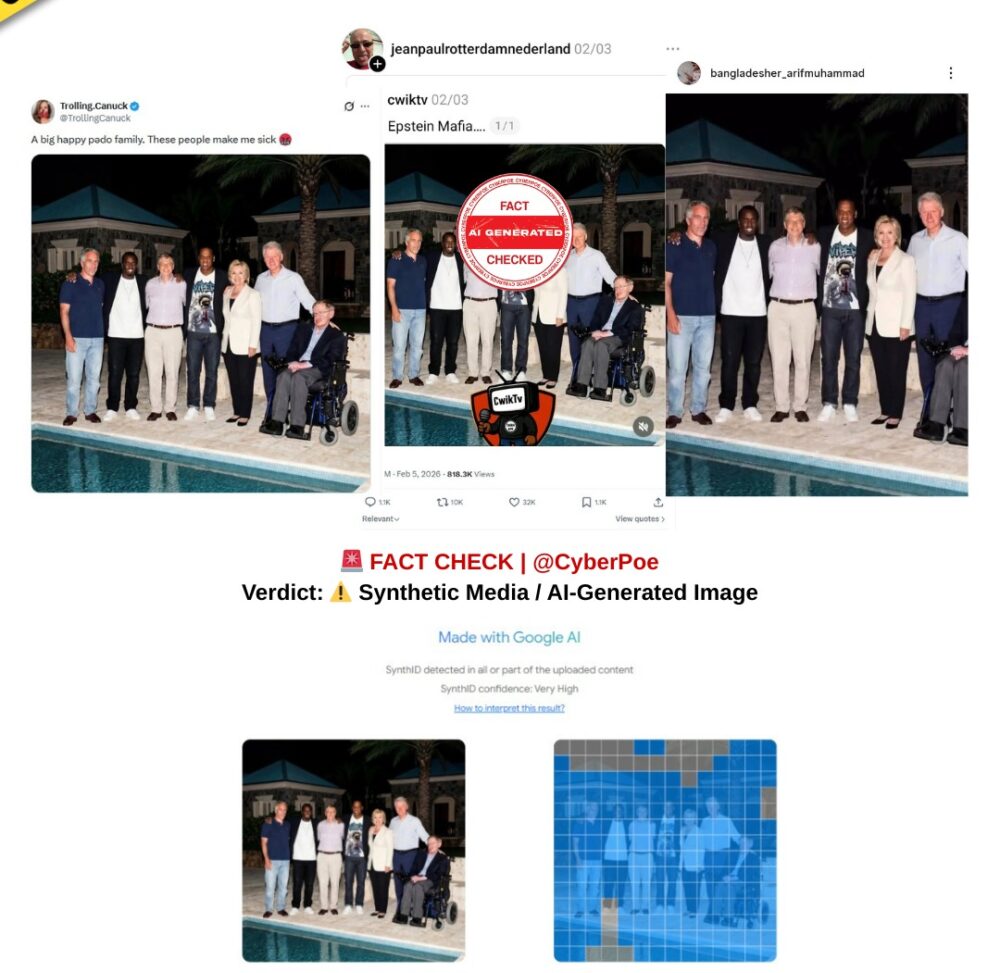

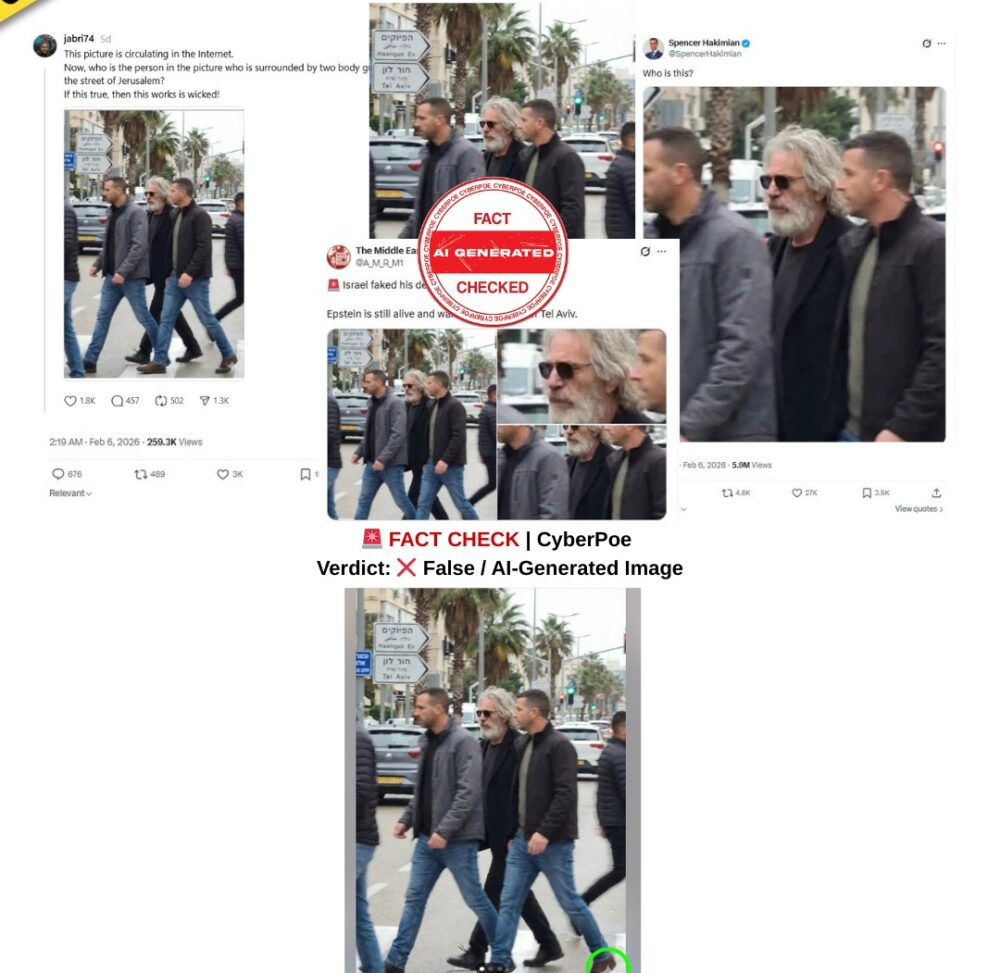

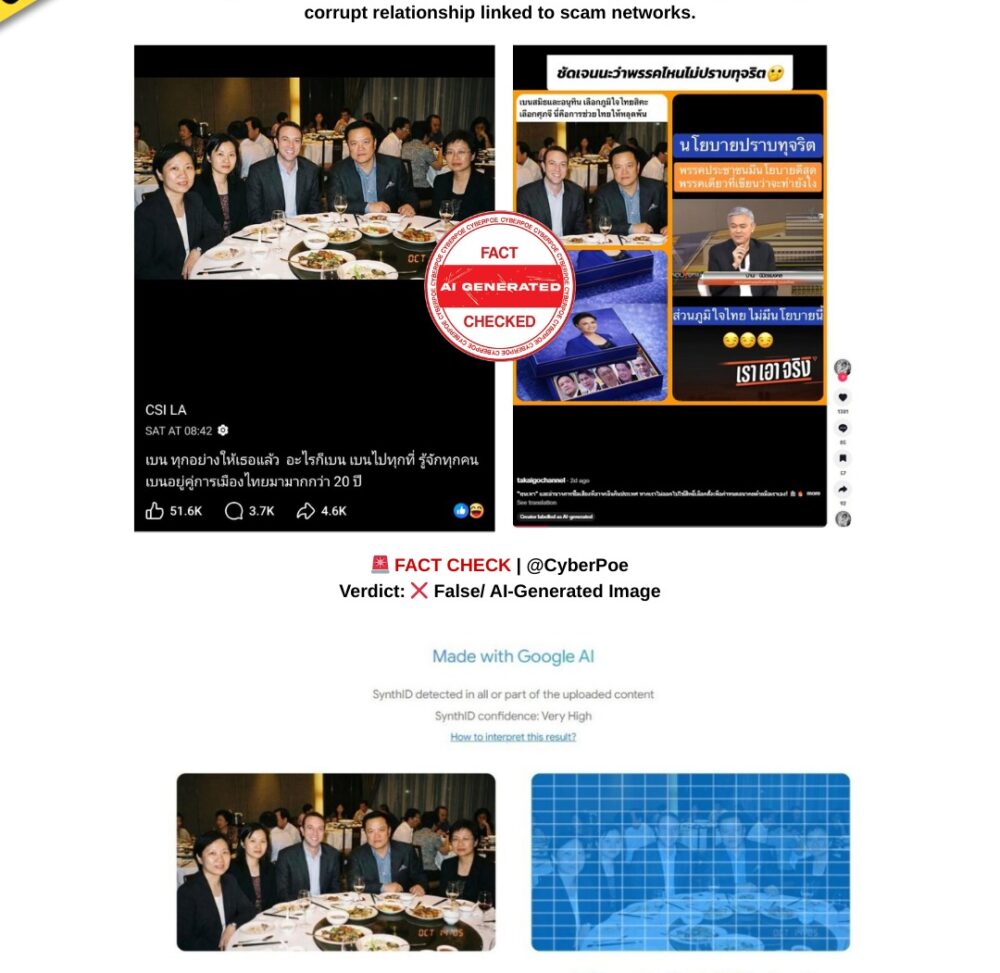

CyberPoe conducted a comprehensive verification of the circulating image using multiple methods. Reverse-image searches were performed, followed by technical analysis with AI-detection tools and direct comparison with verified photographs taken by professional journalists at the crash site. This process established that the image was not an authentic photograph from the January 2026 accident.

Google’s “About this image” feature showed that the picture carried the label “Made with Google AI.” Further confirmation came from Google’s SynthID[1] detector, a system designed specifically to identify content generated with Google’s artificial intelligence tools. The detector returned a “very high” confidence rating that the image was AI-generated. This technical evidence alone is enough to rule out the image as genuine documentation of the Thailand crash.

Visual and Structural Inconsistencies

Beyond AI-detection markers, close visual inspection reveals inconsistencies that further undermine the claim. Official reporting confirmed that the train involved in the accident was Special Express Train No. 21 on the Bangkok–Ubon Ratchathani route. The rolling stock used for this service has a specific carriage design, including the placement of doors and windows.

In the viral image, a door appears in the middle of a carriage, a feature that does not exist on the actual model used on that route. This structural error indicates the image was generated without accurate reference to the real train involved.

The depiction of casualties also contradicts verified reporting. The AI-generated image shows bodies covered with white cloths arranged directly on the railway tracks. Journalists who documented the scene confirmed that bodies were not laid out in this manner, and verified photographs from the site bear no resemblance to the orderly yet graphic arrangement shown in the viral image. The overall composition reflects a synthetic interpretation of disaster imagery rather than a real scene.

How the Image Gained Traction

The image spread rapidly across platforms during a moment of intense public emotion. In the early hours of breaking news, official images were scarce, creating an information vacuum. The AI-generated image filled that gap, offering a shocking visual narrative that matched public expectations of a catastrophic event. Its circulation accelerated further when online outlets included it in their content before it had been verified, showing how quickly unvetted visuals can cross from social media into broader information spaces.

Why This Matters

The use of AI-generated images to represent real-world tragedies poses serious risks. Such fabrications mislead the public, disrespect victims and their families, and distort understanding of events that may still be under investigation. In cases involving potential negligence or criminal liability, false visuals can muddy the evidentiary landscape and distract from verified facts.

As generative AI tools become more accessible and more realistic, fabricated disaster imagery is likely to appear more often during breaking news. This makes verifying the source, context, and technical origin of an image essential rather than optional.

CyberPoe Conclusion

The image claiming to show the aftermath of the January 2026 crane collapse onto a passenger train in Thailand is not real. It was generated using artificial intelligence and contains clear technical and visual inconsistencies when compared with verified reporting and on-scene photography. Presenting it as authentic disaster imagery strips away context and spreads misinformation at a moment of national grief.

CyberPoe | The Anti-Propaganda Frontline 🌍