False Claim of Paid Protesters Fueled by AI-Generated Video

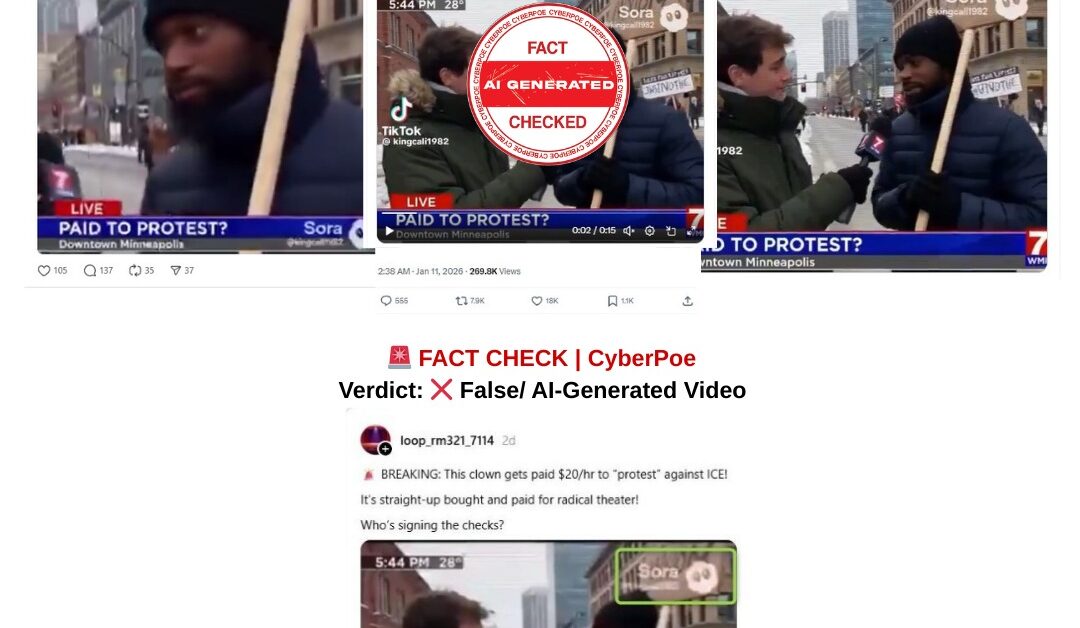

In the volatile aftermath of the January 7, 2026, shooting involving a U.S. Immigration and Customs Enforcement agent in Minneapolis,[1] a new strand of misinformation rapidly took hold online. A short video clip, styled to resemble a televised street interview, began circulating widely across X,[2] Threads,[3] and other platforms.[4] In the clip, a man identified as an anti-ICE protester appears to admit on camera that he is being paid twenty dollars per hour to participate in demonstrations against immigration enforcement. Shared amid heightened emotions and political polarization, the video was framed as proof that public anger over the shooting was manufactured rather than genuine. CyberPoe's investigation confirms this claim is entirely false. The video is not authentic footage from Minneapolis but a piece of synthetic media generated using artificial intelligence.

How the Narrative Took Shape Online

The video gained traction because it fit neatly into an existing narrative promoted by some political actors: that protests critical of immigration enforcement are staged, funded, or orchestrated rather than organic expressions of public concern. The clip's presentation mimicked the visual language of local news reporting, complete with a handheld microphone, lower-third graphics, and a brief on-the-street exchange. In moments of crisis, such visual cues often lower skepticism, especially when audiences are already primed to distrust protest movements. As the video spread, reposts stripped it of its original context, and their captions presented it as candid evidence rather than questioning its origin. Within hours, it was being cited as justification to dismiss the Minneapolis demonstrations as illegitimate.

Artificial Origins and Digital Forensics

CyberPoe's verification process quickly revealed that the footage was not recorded in the real world. A key indicator lies directly within the video itself: a floating watermark associated with OpenAI's Sora,[1] a text-to-video generation tool. This watermark is a built-in marker signaling that the content was synthetically created, and its presence alone is sufficient to disprove claims of authenticity. Beyond the watermark, further forensic review exposed structural inconsistencies common in AI-generated video, including unnatural facial movements, inconsistent lighting, and background elements that fail to maintain spatial coherence across frames. These artifacts are readily apparent to trained analysts and clearly indicate that the clip was produced by an algorithm rather than a camera.

The Fictional News Outlet Problem

Another decisive red flag is the supposed television channel shown in the video. The microphone flag and on-screen chyron display the name “WMIN 7,” presented as a local Minneapolis broadcaster. No such station exists. There is no licensed television outlet, cable channel, or digital newsroom operating under that name in Minnesota or elsewhere in the United States. Fabricated media branding is a recurring feature in AI-generated misinformation, designed to evoke credibility without being easily traceable to a real organization that could dispute the content. The absence of any record of “WMIN 7” further dismantles the claim that the video represents legitimate journalism.

Contextual Errors That Break the Illusion

Beyond technical markers, the video also collapses under basic contextual scrutiny. The timestamp displayed on screen reads 5:44 pm, yet the scene is bathed in daylight. In Minneapolis in early January, the sun sets before 5 pm, so outdoor footage recorded at 5:44 pm would show darkness or deep twilight. Protest signs visible in the background contain garbled, nonsensical text that does not correspond to real slogans used at Minneapolis demonstrations. These elements reflect known limitations of current AI video models, which often struggle with accurate text rendering and real-world environmental consistency.

Tracing the Source of the Fabrication

CyberPoe traced the earliest known upload of the video to a TikTok account operating under the handle @kingcali1982.[1] The account posted the clip on January 10, 2026,[2] and notably included a label indicating that the content "contains AI-generated media." This account has a documented history of publishing AI-created political satire and fabricated interview scenarios. Although the original upload acknowledged its synthetic nature, that context was lost as the video migrated across platforms. Screenshots, cropped reposts, and re-uploads stripped away the disclosure and, in some versions, the watermark, allowing third-party accounts to reframe the clip as authentic evidence.

Reality of the Minneapolis Protests

Verified reporting from established outlets paints a very different picture of events in Minneapolis following the January 7 shooting of Renee Nicole Good. Journalists on the ground documented demonstrations as largely peaceful, driven by community grief and demands for accountability. There is no credible evidence, financial record, or investigative reporting suggesting that protesters were being paid to attend. The narrative promoted by the AI-generated video directly contradicts verified observations and serves to delegitimize lawful public dissent.

CyberPoe Conclusion

The viral video claiming to show a paid anti-ICE protester in Minneapolis is entirely synthetic. It was generated using AI tools, distributed with misleading framing, and weaponized to erode trust in public protest movements during a sensitive moment. This case illustrates a growing disinformation threat: the use of artificial intelligence to create emotionally persuasive but completely false "evidence." As AI-generated media becomes more accessible, the burden of verification grows heavier, especially during breaking news events. CyberPoe's findings underscore a critical reality of the digital age: not everything that looks real is real, and skepticism is no longer optional but essential.

CyberPoe | The Anti-Propaganda Frontline