Nigerian influencer Martins Vincent Otse, aka VeryDarkMan (VDM)

🚨 FACT-CHECK | @CyberPoe_ CLAIM:

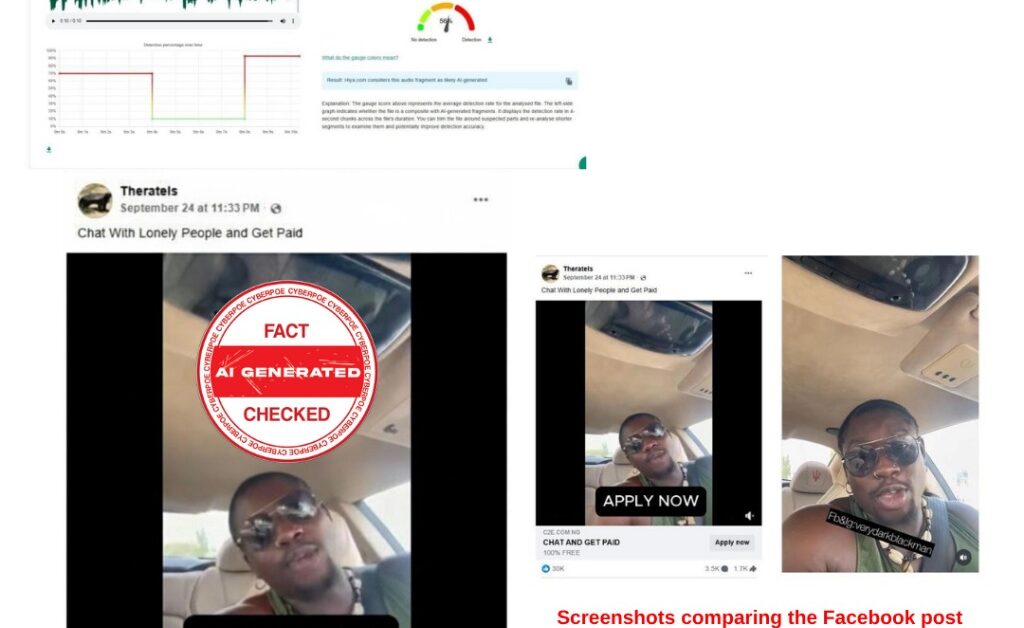

A viral Facebook video circulating since late September 2025 appears to show Nigerian social media influencer Martins Vincent Otse, popularly known as VeryDarkMan (VDM), endorsing a website that allegedly pays people up to $1,000 per month to chat with lonely men and women. The clip, which runs just over ten seconds, quickly gained traction across Facebook, Instagram, and TikTok, drawing thousands of shares and comments. It shows VDM in what appears to be a casual setting, claiming that viewers can “make $1,000 monthly chatting lonely people” and urging them to click a link to apply. However, a detailed forensic analysis by CyberPoe shows that the footage is not authentic. It is a deepfake: artificial intelligence was used to impersonate the influencer’s face and voice as part of a scam campaign.

THE ORIGIN OF THE CLIP

The video was first shared on September 24, 2025, by a Facebook account named “TheRatel”, which falsely presented itself as affiliated with VDM’s fan community. The post linked to a Linktree page advertising so-called “chat job” opportunities and “visa sponsorships,” a common front for phishing scams. Users who clicked the link were redirected through multiple domains filled with fake offers promising instant earnings or overseas employment. The domain network showed all the hallmarks of a clickbait scam operation: auto-generated content, recycled thumbnails, and deceptive landing pages designed to harvest personal data. Further analysis revealed that the Facebook page had been created on September 21, 2025, just three days before the video appeared, strongly suggesting a coordinated scam campaign designed to exploit VDM’s growing online popularity.

THE REAL SOURCE VIDEO

A reverse image search and metadata analysis traced the footage back to March 7, 2024, when VDM posted a completely unrelated video on his verified Instagram account. In that original post, he addressed false rumours circulating online that he had bought luxury homes in his hometown. Nowhere in the original video does he mention “chat jobs,” “money-making offers,” or “lonely people.” The viral version is therefore a spliced and re-voiced imitation, a hallmark of modern deepfake manipulation. In the doctored version, the original audio has been entirely replaced, and the video’s frame rate has been manipulated so that the mouth movements appear to match the fabricated dialogue.

TECHNICAL ANALYSIS OF THE DEEPFAKE

Close examination of the viral clip reveals several visual and auditory inconsistencies. The lip-sync is uneven, with small but visible desynchronization between speech and mouth movement, a telltale sign of AI voice synthesis. The facial texture also flickers around the jawline and teeth, an artifact typical of deepfake rendering tools, which struggle to reproduce fine detail under changing light. When the clip’s audio was tested with Hiya, an AI content detection platform, it was flagged as “likely AI-generated” because of synthetic tone modulation and unnaturally uniform pitch. Together, these findings show that the clip was digitally altered using generative AI and was not recorded by the influencer himself.

THE SCAM STRUCTURE BEHIND THE VIDEO

The scam network promoting this fake clip appears to rely on a chain of click-farm websites masquerading as job portals. Each link on the Linktree page redirects to domains promoting “scholarships,” “visa jobs,” and “international work-from-home offers.” Such networks typically lure users into submitting personal information, which is later used for identity theft, spam advertising, or paid-traffic monetization. In many cases, these scams are run from anonymously registered foreign domains and operate under rotating names to evade detection by social platforms. By using AI-generated deepfakes of celebrities such as VDM, the operators exploit their audiences’ trust and familiarity to make the scam more credible.

CONTEXT AND IMPACT

Deepfake scams have become one of the most alarming trends in the digital misinformation ecosystem. Across Africa, fake videos featuring popular figures, from actors and influencers to politicians, have been used to promote everything from bogus investments to cryptocurrency fraud. Victims often fall prey because the visuals look so realistic that even seasoned internet users mistake them for genuine endorsements. The VeryDarkMan deepfake shows how AI manipulation tools are now being weaponized to amplify fraudulent schemes. It also underscores the urgent need for stronger digital literacy, fact-checking mechanisms, and AI regulation frameworks to protect online communities from financial and reputational harm.

CONCLUSION

The viral video claiming to show VeryDarkMan promoting a $1,000-per-month chat job is entirely false. It is a deepfake created through AI-driven face and voice synthesis to promote fraudulent links and scam networks. The original footage comes from a March 2024 Instagram video that had nothing to do with jobs or online earning schemes. The evidence, including the original post and AI forensic testing, confirms that the viral clip is digitally altered and deceptive. This incident is another reminder that AI-generated misinformation can be convincing, and that users should always verify celebrity endorsements through official accounts and credible fact-checking platforms before clicking or sharing such content.

SOURCE VERIFICATION:

- Original Instagram video (March 7, 2024)

- Hiya AI-Detection Report (October 2025)

- Reverse Image and Metadata Cross-Check (October 2025)