The Viral “CCTV Islamic Indoctrination Video”: What’s Real and What’s Manufactured

The Claim

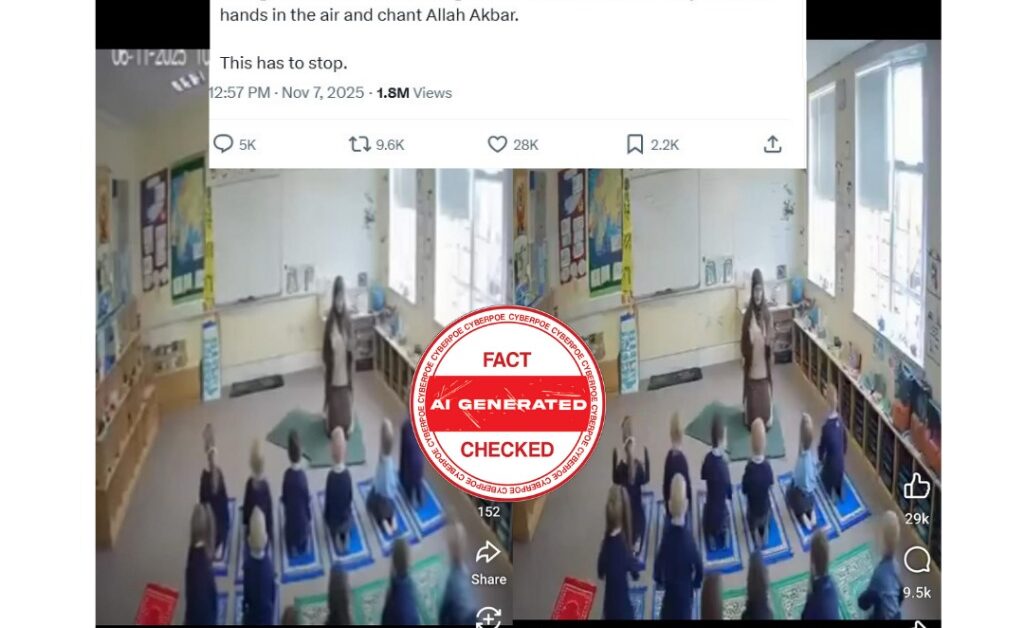

A 15-second clip is circulating across Western social platforms, presented as CCTV footage from an elementary school classroom. It depicts a hijab-wearing woman appearing to guide white schoolchildren as they kneel on prayer mats and chant “Allahu Akbar” and “Subhan Allah al-A’la.” The posts spreading it frame the scene as proof of “Islamic indoctrination” inside Western schools and claim the video exposes a growing, hidden religious agenda. The narrative gained traction quickly because it taps into ongoing culture-war anxieties and pre-existing fears about migrant communities and changing classroom environments.

CyberPoe’s Verification

After reviewing the footage and consulting two independent AI-forensics analysts, CyberPoe found that the video does not hold up to even basic scrutiny. The clip behaves like a piece of synthetic media designed to mimic the aesthetic of classroom surveillance rather than display an authentic event. The supposed teacher visually collapses into the background whenever she shifts position, as if sitting on a chair that never existed in the scene. Her facial features deform subtly each time she turns her head, a known artifact associated with frame-to-frame generative smoothing failures. Several children exhibit elongated or distorted head shapes, especially those closest to the camera, while classroom posters and wall décor shift location within milliseconds, something fixed objects cannot do in genuine CCTV footage.

The audio behaves in an equally artificial manner. The voices have an overly compressed, metallic texture usually found in low-tier AI voice synthesis, and the acoustic field lacks the natural environmental variation typical of a real classroom. There is no ambient chatter, no distant hallway noise, no reverberation from the walls: only a flat, sterile layer of sound that exposes its synthetic origin. Not a single educational institution, parent, or local authority has reported any incident resembling the one depicted. The video also contains no spatial identifiers, no classroom markers, and no verifiable metadata. Its timestamp format matches prompt-based AI video templates that have become increasingly common across misinformation networks since mid-2025.

Why It Matters

This video is part of a growing pattern: fabricated AI content created specifically to trigger emotional responses around identity, culture, and religion. These clips are engineered to spread outrage before verification can catch up, and once the content is reposted without disclaimers, it becomes ammunition for broader political narratives. The so-called “indoctrination” storyline has become a recurring tactic in disinformation campaigns, using synthetic media to paint marginalized groups as threats inside Western institutions. By manufacturing scenarios that mimic school environments, these operations exploit one of society’s most sensitive vulnerabilities: the protection of children.

CyberPoe’s Verdict

After analyzing visual, audio, contextual, and source-based evidence, CyberPoe concludes that the footage is not authentic and does not depict any real classroom event. The video is overwhelmingly consistent with AI-generated content crafted to imitate surveillance footage for the sole purpose of inflaming culture-war rhetoric. There is no proof that the incident occurred, no corroboration from official sources, and no physical markers linking the clip to any school.

This is AI-generated content driving a false narrative.

CyberPoe | The Anti-Propaganda Frontline 🌍