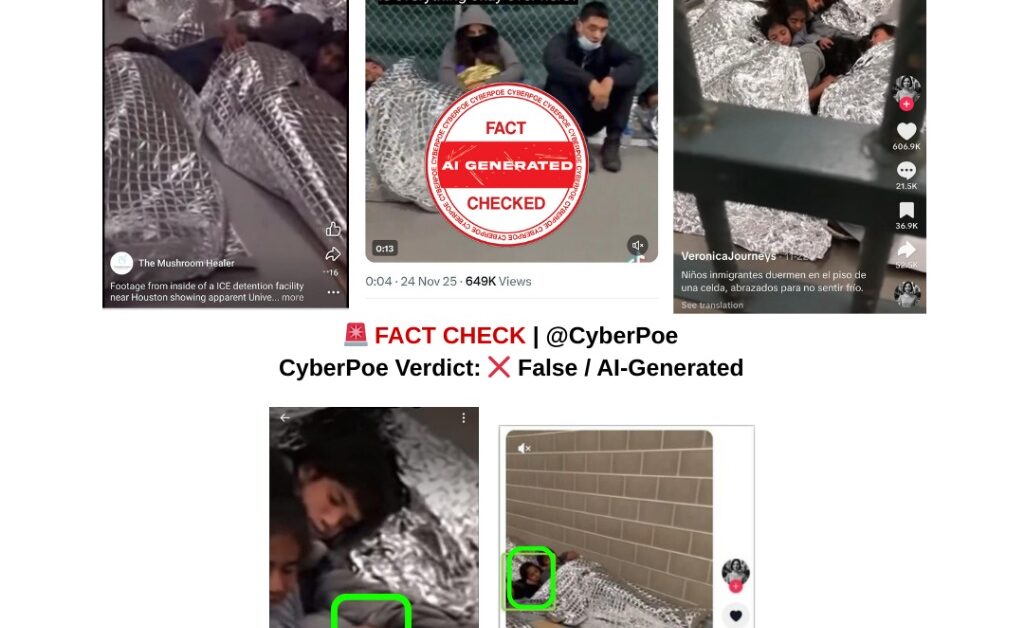

Viral ICE “Migrant Floor” Videos Are AI-Generated and Do Not Show Real Detention Conditions

Introduction: The Viral Outrage and Political Context

In late 2025, two videos portraying dozens of migrants sleeping on the bare floors of what appeared to be a US Immigration and Customs Enforcement (ICE) detention facility began circulating widely across X[1], Facebook[2], and TikTok[3]. The clips surfaced at a politically charged moment, coinciding with renewed controversy surrounding President Donald Trump’s second-term mass deportation policies. The visuals, which appeared to show rows of detainees wrapped in metallic mylar blankets inside a chain-link enclosure, were framed as proof of inhumane conditions inside federal detention centers. The posts accompanying the videos carried deeply emotional captions, accusing the administration of forcing migrants, including children, to sleep without beds or basic necessities. Others attempted to politicize the footage from the opposite direction, falsely suggesting the visuals originated during the Obama administration. In reality, both sides were reacting to imagery that was never real to begin with.

Origins of the Videos and the First Red Flags

CyberPoe’s verification process quickly established that the videos did not originate from any official source, whistleblower leak, government transparency archive, or credible media organization. Instead, both clips bore the distinctive watermark of a TikTok account named @multiversoviral1, a page already known for regularly posting synthetic, AI-generated political scenarios. Previous content from the same account included exaggerated or fictionalized videos involving everything from global leaders to fabricated US policy events. Several posts from that account were explicitly tagged with “Sora”, referencing OpenAI’s text-to-video generator. This discovery was the first strong indicator that the footage had no connection to any US detention facility, nor to the political circumstances under which it was being circulated.

Forensic Analysis: AI Signatures Hidden in the Footage

A deeper forensic inquiry, conducted through CyberPoe’s verification desk and cross-referenced with AFP’s media investigations, revealed multiple technical markers confirming that the videos were synthetic. Dr. Matthew Stamm of Drexel University, an expert in digital forensics, identified statistical “fingerprints” embedded within the clips: patterns characteristic of generative-AI models rather than of optical footage captured by real cameras. The reflections on the metallic blankets shifted in ways inconsistent with natural lighting, suggesting a rendering process rather than real illumination inside a facility. One detainee’s feet were positioned at anatomically impossible angles, bending in ways a human foot cannot. These are the kinds of subtle distortions that generative systems often produce because they replicate appearances rather than physical rules.

Furthermore, Professor Walter Scheirer of the University of Notre Dame observed irregularities in facial and limb formations. Background figures appeared with half-rendered faces or blurred eye structures, and one individual seemed to be missing fingers entirely. Such deformities are signature flaws produced when AI models struggle to render humans within crowded or low-light environments.

Complementing these findings, Professor Siwei Lyu of the University at Buffalo highlighted unnatural patterns of movement throughout the clips. In a real detention environment, individuals lying in discomfort on cold floors would shift, breathe visibly, or display micro-movements. Instead, the subjects in the AI-generated videos remained nearly motionless for long stretches. In one segment, an arm appeared spontaneously between frames, an artifact characteristic of generative video synthesis rather than of human behavior or camera capture. These combined observations formed the backbone of an evidence-based conclusion: the footage was artificial.

Misrepresentation of Real Issues Through Synthetic Imagery

Although the videos themselves were fabricated, their virality was driven by their proximity to real concerns. Overcrowding, insufficient bedding, and children sleeping on concrete floors have been documented inside ICE facilities across multiple administrations (Obama, Trump, and Biden). This historical context made the synthetic footage immediately believable and psychologically potent, enabling it to spread rapidly among politically polarized audiences. Human rights activists shared the videos as proof of systemic mistreatment, while critics attempted to dismiss them as older footage from previous administrations. Both narratives, however, rested on visuals that never depicted real events.

The weaponization of AI-generated imagery in a politically sensitive domain such as immigration illustrates an emerging threat: synthetic media is increasingly capable of imitating real injustices and exploiting genuine public fears. This creates a dangerous cycle in which misinformation not only distorts current events but also undermines trust in legitimate documentation of real abuses.

Why This Fabrication Matters in the Broader Information Ecosystem

The widespread circulation of these fake videos highlights the accelerating challenge of deepfake and AI-driven misinformation. By mimicking commonly photographed scenes from past controversies, such AI creations pass off fabricated content as convincing replicas of real policy failures. As public debate intensifies, particularly during election cycles, synthetic imagery of this kind can inflame political tensions, mislead policy conversations, and erode public confidence in both institutions and media reporting.

In this case, the footage exploited an already polarized debate about the humanitarian conditions at US detention centers. It inserted fabricated evidence into legitimate discourse, ultimately making it more difficult for the public to differentiate fact from fiction during humanitarian crises or policy controversies.

CyberPoe Conclusion

After a full investigative review, CyberPoe concludes that the viral “migrant floor” videos attributed to ICE facilities were entirely AI-generated. They do not depict real detention centers, are not tied to Trump’s deportation policies, and cannot be used as evidence of any administration’s treatment of migrants. While immigration conditions remain a serious and documented issue, these specific videos are fabrications designed to manipulate emotions and distort political narratives.

CyberPoe | The Anti-Propaganda Frontline 🌍