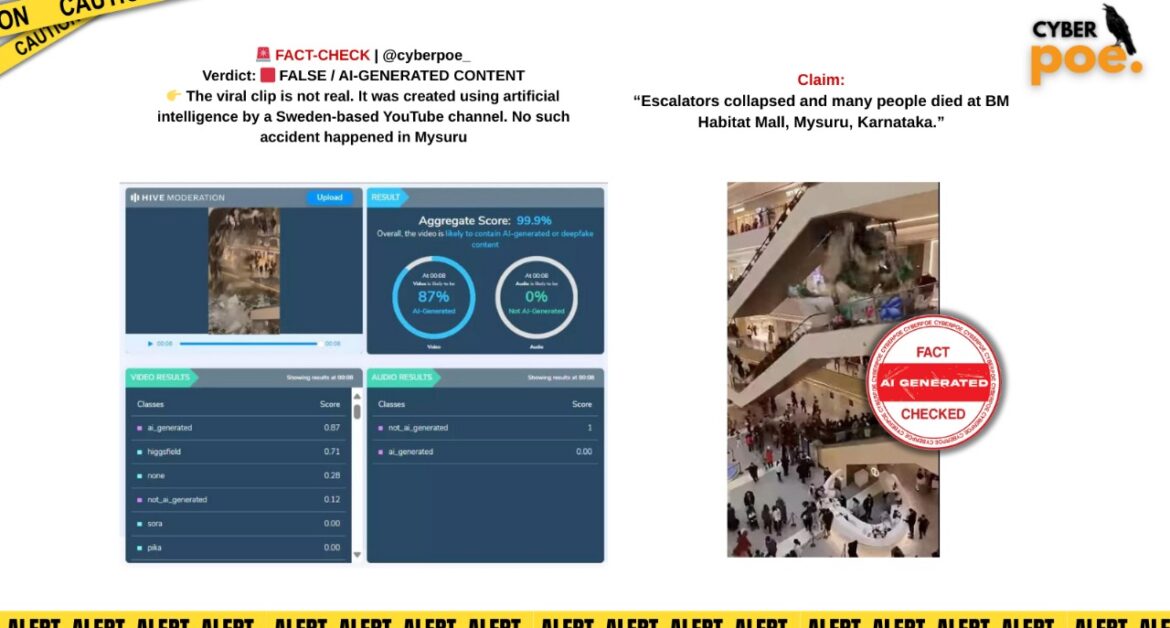

Viral Video of Escalator Collapse in Mysuru Mall is AI-Generated

In mid-September 2025, a video depicting multiple escalators collapsing inside a mall in Mysuru, Karnataka, went viral on social media platforms such as X, WhatsApp, Facebook, and TikTok. The post accompanying the video claimed that "many people died at BM Habitat Mall" in the incident. The dramatic visuals quickly drew thousands of shares, causing public panic and prompting discussions about mall safety and structural hazards. However, an in-depth investigation by CyberPoe confirms that the viral footage is fabricated and was generated using AI. No such accident occurred at BM Habitat Mall or anywhere else in Mysuru.

The Origin of the Viral Video

A closer analysis of the video revealed several inconsistencies typical of AI-generated content. Observers noticed anomalies such as two people unnaturally merging into each other while walking, physically implausible motion as the escalators collapse, and distortions in human movement that would not appear in real-world footage. These red flags prompted a reverse search of the clip, which traced it back to a Sweden-based YouTube channel called "Disaster Strucks", where it had been uploaded on June 10, 2025, roughly three months before the viral social media posts.

The YouTube channel explicitly states that its videos are artificially created for entertainment and awareness purposes. The original upload carried a clear disclaimer in its caption, "This is AI art, generated by me," and the channel's bio further states that its content consists entirely of synthetic disaster visuals. This confirms that the footage circulating on social media was repurposed and falsely presented as a real accident in India, misleading viewers.

To further verify the video's authenticity, CyberPoe ran it through the Hive Moderation AI detection tool, a widely used platform for analyzing synthetic and deepfake media. The analysis returned an 87% probability that the video is AI-generated, which, together with the creator's own disclaimer, strongly supports the conclusion that the footage is artificial. AI-generated visuals are becoming increasingly convincing, often combining realistic textures, lighting, and motion that make them appear authentic to casual viewers. This growing realism underscores the importance of critical scrutiny before sharing viral disaster content online.

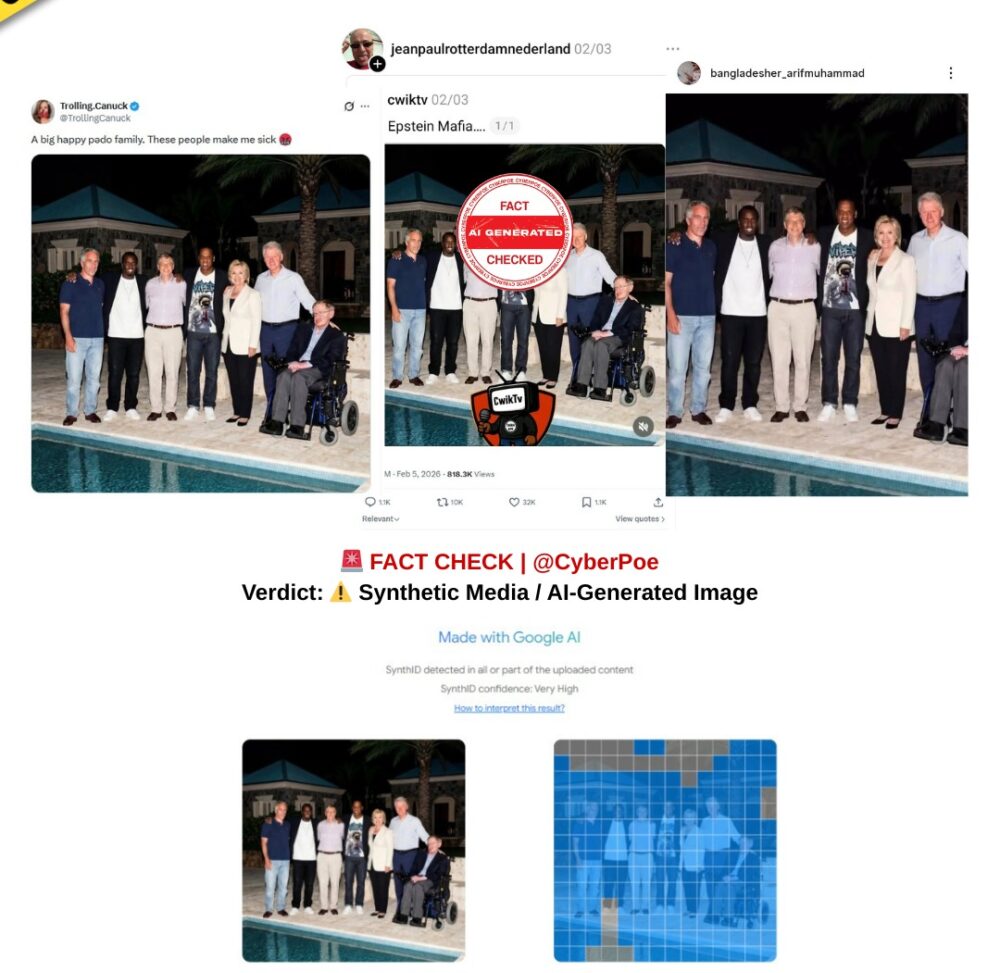

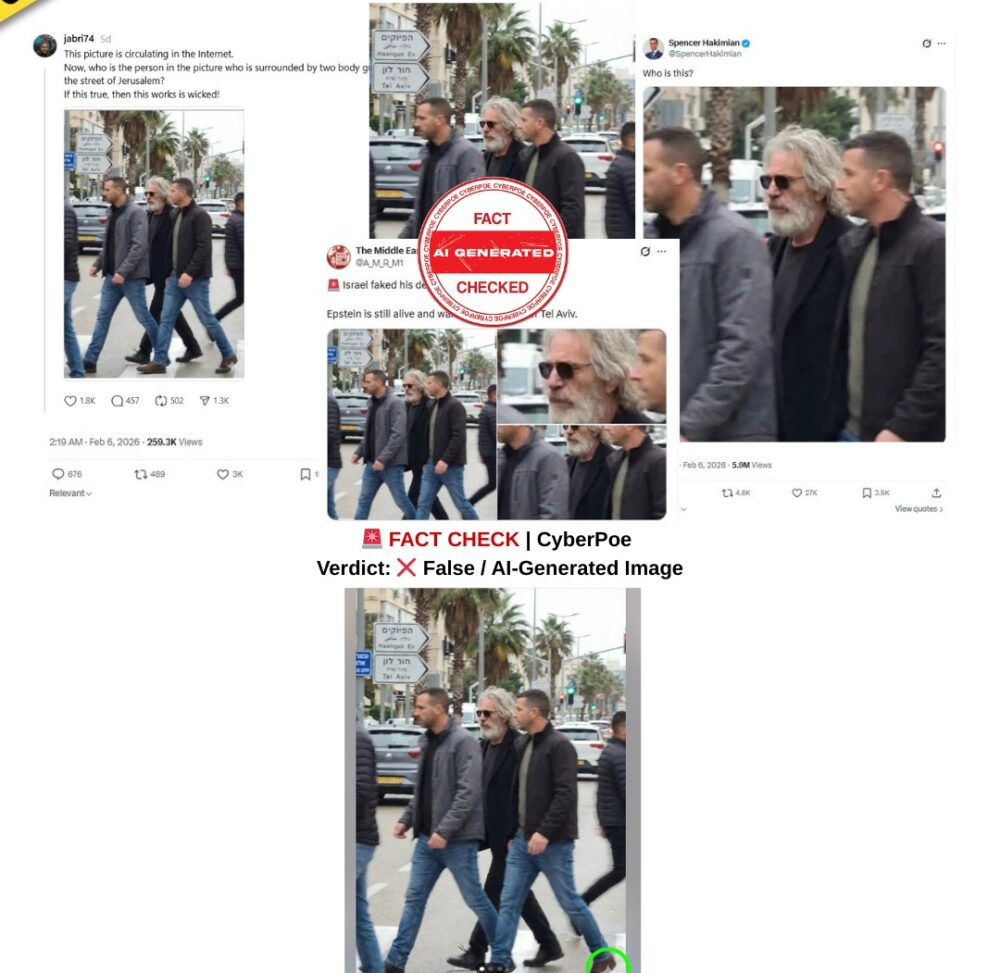

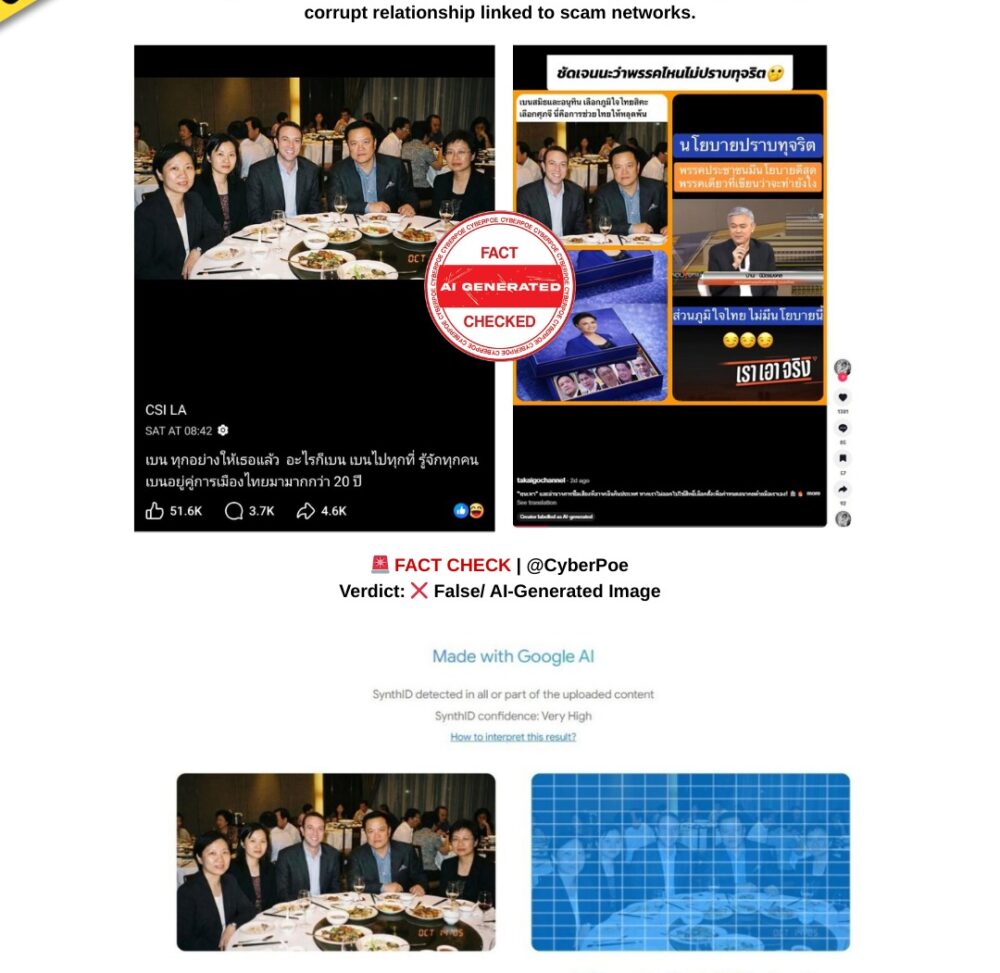

Spread of Misinformation

The viral video spread rapidly across multiple social media platforms. On X, both verified and unverified accounts shared the clip with sensational captions claiming mass casualties at BM Habitat Mall. WhatsApp forwards amplified the misinformation by labeling it "breaking news," creating unwarranted panic among the public. Facebook and TikTok pages also circulated the video to drive engagement, clicks, and ad revenue. Analysts note that these posts deliberately exploited the dramatic visuals to manipulate audience emotions, a common tactic in online disinformation campaigns.

Real-World Implications

The circulation of AI-generated disaster videos such as this one has serious consequences. First, they can provoke public panic, causing unnecessary distress and potentially overwhelming emergency services with false alarms. Second, they may harm the reputation of institutions or locations falsely depicted as disaster sites (in this case, BM Habitat Mall in Mysuru). Third, repeated exposure to synthetic disaster visuals contributes to a general mistrust of news media, making it harder for people to distinguish real emergencies from fabricated events.

In India and globally, AI-generated deepfakes and synthetic media are increasingly being weaponized to create false narratives. CyberPoe emphasizes that while AI technology has many positive applications, its misuse for generating fictitious disaster events poses significant risks to public perception, media credibility, and social stability.

How to Verify and Stay Safe

Users encountering dramatic or viral disaster videos online should take several precautionary steps:

- Check the source: Verify whether the video comes from credible media outlets or official authorities.

- Look for anomalies: AI-generated content often contains visual glitches, unusual merges of human figures, or unrealistic physics.

- Use reverse search: Tools like Google Reverse Image Search or InVID can trace the original upload and reveal whether the video is genuine or recycled content.

- Consult fact-checking platforms: Websites like CyberPoe provide verified information and analysis of viral content to prevent the spread of misinformation.

Conclusion

The viral video showing escalators collapsing in BM Habitat Mall, Mysuru, is AI-generated and entirely fictitious. No incident matching the video's description has been reported, and authorities in Mysuru have not issued any alerts or statements about escalator accidents in local malls. The footage originated from a Sweden-based YouTube creator and was repurposed online to mislead viewers.

CyberPoe urges the public to exercise caution and critical thinking when consuming and sharing videos of disasters or emergencies. In an era of AI-generated content and deepfakes, verification before sharing is crucial to prevent panic, misinformation, and reputational harm. Always rely on official sources and verified fact-checking platforms before believing or amplifying viral content.

CyberPoe | The Anti-Propaganda Frontline 🌍